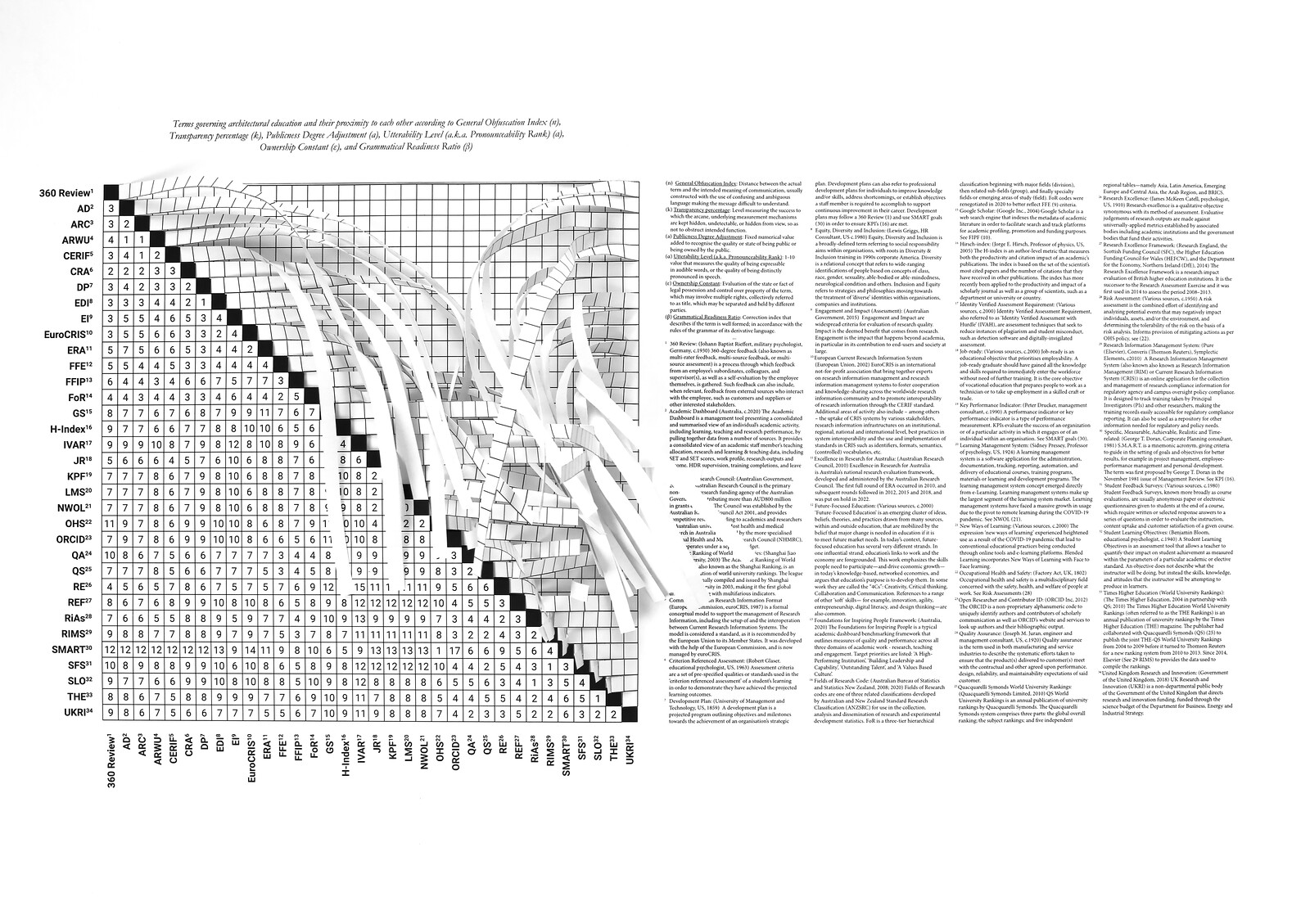

Digital technologies are now a fact of life; they are part of almost everything we do. Any architect sketching the layout of a parking lot these days is likely using more electronic computation than Frank Gehry did in the 1990s to design the Guggenheim Bilbao. As always, technical innovation allows us to keep doing what we always did, but faster or more cheaply, which is a good enough reason for technical change to be happening at all. In the case of building and construction, costs and speed of delivery are of capital importance for economists, politicians, and developers, as well as for society at large. Yet for a design historian this purely quantitative use of digital technologies is only marginally relevant. If we look at buildings as architects, what matters is not so much what digital technologies can do, but what we could not do without them. This is the critical component of innovation, and only an enquiry into this creative leap may help us understand why and how digital tools have changed the way architecture is conceived and built, and the way it looks. And sure enough, after a few decades of computer-driven technical innovation, digital design theory and digitally intelligent design already constitute a significant chapter in the history of contemporary architecture, albeit, at the time of writing, a chapter still mostly unwritten (with some notable exceptions that are mentioned in the appended bibliographical note). I have myself covered parts of this storyline in some of my publications, and more is in my latest book, just published; when my friends at the Jencks Foundation and e-flux Architecture asked me to tell this story in a few thousand words, and have it shown as a diagram, in the great Charles Jencks tradition, I gladly took up the challenge.

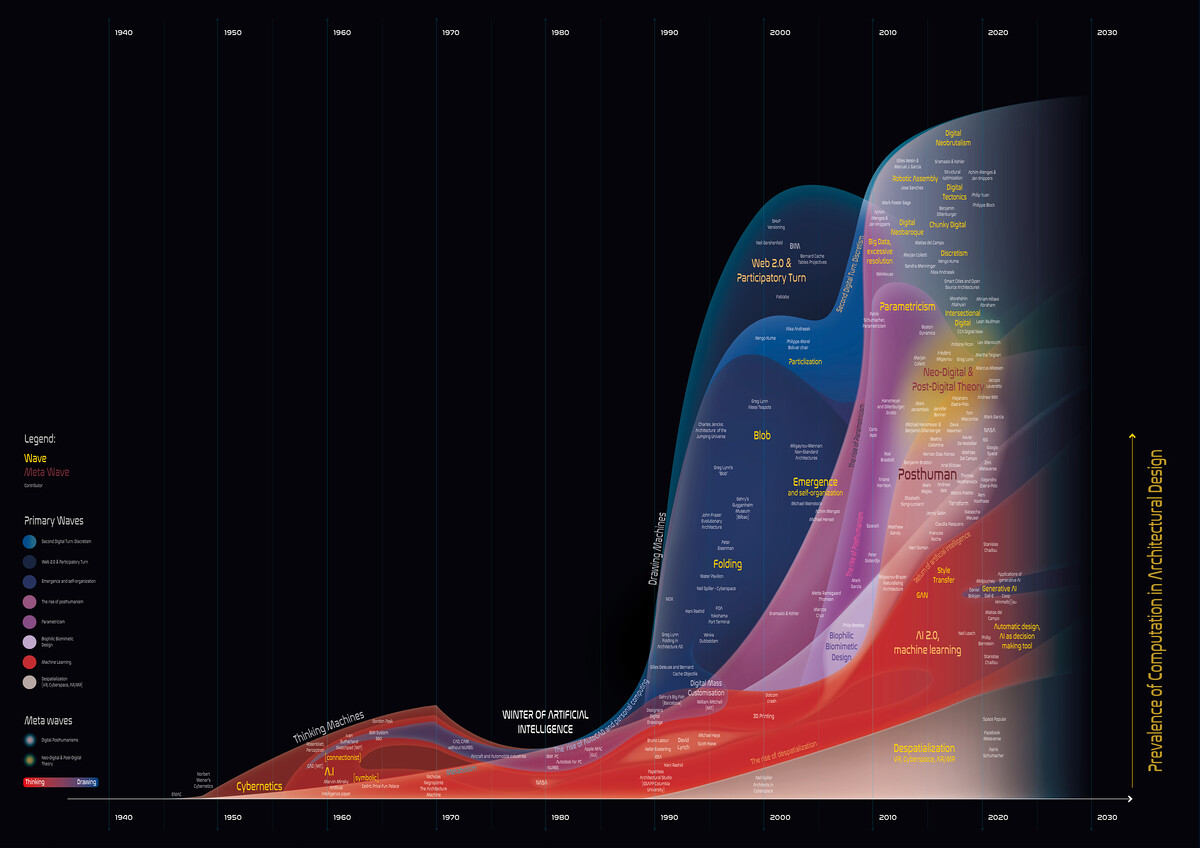

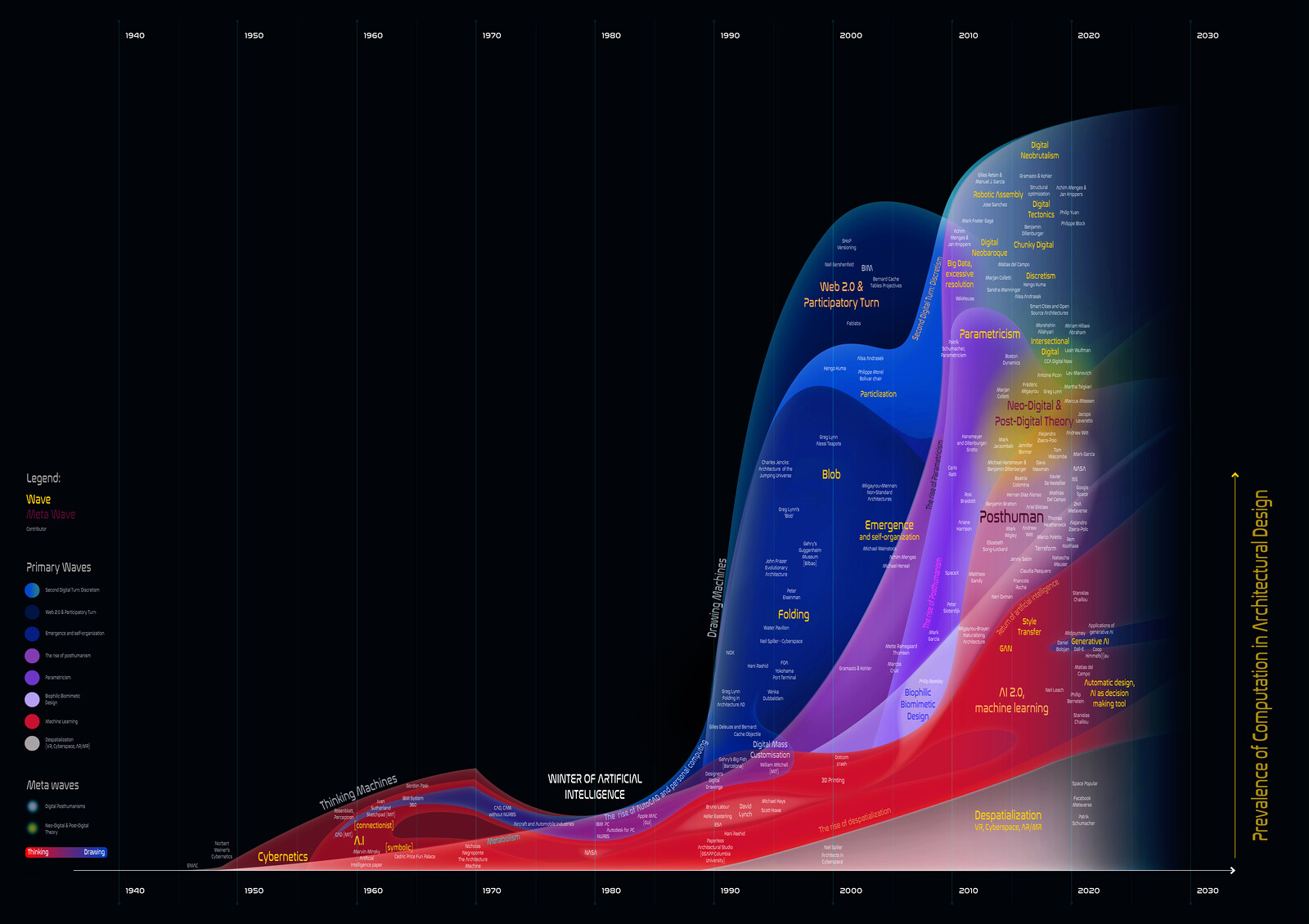

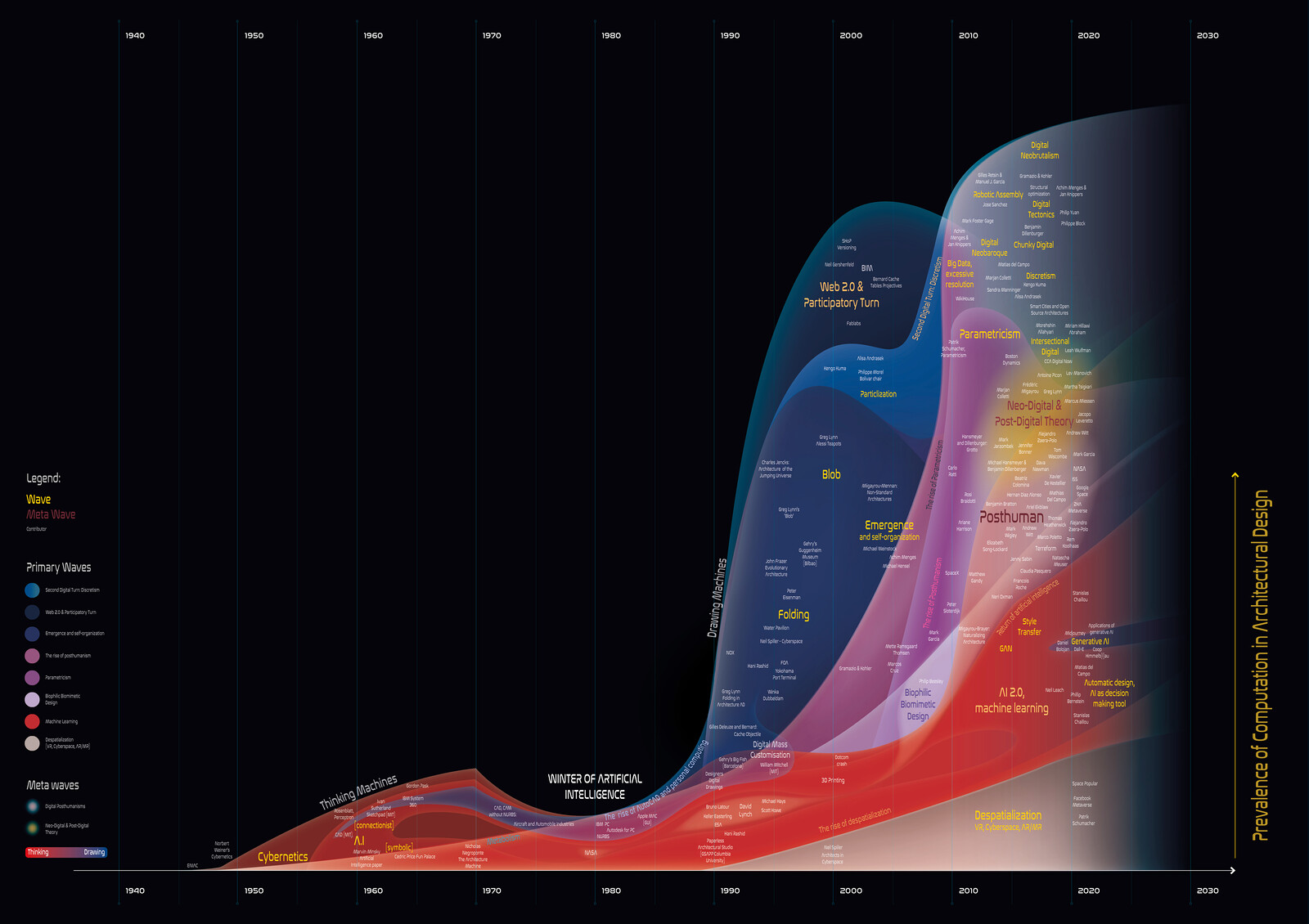

The diagram shown here was drawn by Mark Garcia and Steven Hutt, based on my text and other sources. As it derives from a purely historiographic narrative, and it builds on a linear timeline, it should be called a chronographic historiogram, rather than a conceptual diagram; its successive “waves” in different colors, getting taller over time, suggest the growing importance of various computational tools and theories of architectural design, from the invention of electronic computers to their current ubiquity. But if the role of digital tools in design grew formidably over time, their rise to pre-eminence was far from steady, as periods of technological exuberance were followed by periods of technological retrenchment (booms and busts), and digital tools and theories rose and fell with the ebb and flow of the economy and of design culture, following the twists and turns, and occasionally the fads, of technical innovation. This is the story I shall tell in this piece. For the sake of brevity, however, I shall focus on the main ideas driving the “waves” and “metawaves” (or whirls and turbulences among waves) shown in the diagram, to the detriment of names and places; a few more names I could not mention in the text are shown in the diagram.

1. False Starts

The first computers in the modern sense of the term (as imagined by Alan Turing in 1936) were built during World War II. The famous ENIAC began operation in 1946: it weighed twenty-six tons and covered an area of 127 square meters in the building of the School of Electrical Engineering of the University of Pennsylvania in Philadelphia. It performed additions, subtractions, multiplications, and divisions, but (and this was the ground-breaking novelty) in any programmable sequence. Computers got smaller and cheaper, but not necessarily more powerful, after the introduction of transistors during the 1950s. Mainframe computers priced for middle-size companies and professional offices started to be available as of the late fifties, but a mass-market breakthrough came only with the IBM System/360, launched with great fanfare on April 7, 1964. Later in the decade its most advanced models had the equivalent of around one five-hundredth of the RAM we find in most cellphones today.

A low added-value professional service dealing with complex problems and data-heavy images and drawings, architecture did not directly partake in the first age of electronic computing. Throughout the 1960s and 1970s there was next to nothing that architects and designers could actually have done with computers in the daily practice of their trade, even if they could have afforded to buy one, which they couldn’t. Pictures, when translated into numbers, become big files. This is still occasionally a problem today; sixty years ago, it was insurmountable. The first to use computers for design purposes were not architects, nor designers, but mechanical engineers; the expression “Computer-Aided Design,” or CAD, was first adopted in 1959 by a new research program in the Department of Mechanical Engineering at MIT dedicated to the development of numerically controlled milling machines. Ivan Sutherland’s futuristic Sketchpad, developed at MIT around 1963, only permitted laborious manipulation of elementary geometric diagrams, but its drawings were input by the touch of a “light pen” on an interactive CRT screen (a TV screen), and the apparent magic of the operation caused a sensation. In this context, and given the pervasive technological exuberance and the techno-utopian optimism of the 1960s, a number of ideas derived from early computer science were adopted and embraced by some architectural avant-gardes of the time; ideas and theories, but not the machines themselves, which architects at the time would not have known what to make of, nor what to do with.

Among the best-known technophilic designers of the time, Cedric Price was famously seduced by the cybernetic theories of Norbert Wiener. At its origins, Wiener’s cybernetics (1948) was a general theory of feedback and interaction between people and machines, originating from Wiener’s wartime studies of autocorrecting servomechanisms. Price derived from this the quirky idea that an intelligent building should be capable of reorganizing itself—dismantling and rebuilding itself as needed—based on use and preferences of its inhabitants, through a system of mechanical movements driven by an electronic brain. Various versions of this idea cross all of Cedric Price’s (unbuilt) work; his Fun Palace (1963–1967), with mobile walls and ceilings and automatically reconfigurable spaces, inspired Renzo Piano’s and Richard Rogers’s Centre Pompidou (1970–1977), where the only parts in motion, however, were the elevators—and a monumental external escalator.

The first theories of artificial intelligence (and the term itself) date back to the now famous Dartmouth College summer seminar of 1956. In a seminal paper written in 1960, Steps Toward Artificial Intelligence, Marvin Minsky defined artificial intelligence as a “general problem solving machine,” and imagined the training of artificial intelligence in ways eerily similar to what today we would call machine learning (sans today’s computing power). That was the beginning of a style of artificial intelligence called “connectionist,” and sometimes “neural,” which Minsky eventually made his enemy of choice and devoted the rest of his life to demoting—starting with his notorious aversion to Frank Rosenblatt’s Perceptron (circa 1958), an electronic device with learning skills purportedly modeled on the physiology of the human brain.

A few years later, back at MIT, a very young Nicholas Negroponte wrote a computer program that was meant, simply, to replace architects. Possibly under the influence of Minsky’s own change of tack, Negroponte’s automatic architect was not supposed to learn from experience; to the contrary, a compendium of formalized architectural lore was instilled and installed in its memory from the start. Based on this encyclopedia, conveniently translated into sets of rules, the machine would offer to each end-user an interactive menu of multiple choices that would drive the design process from start to finish. This style of artificial intelligence is known today as “symbolic,” “expert,” knowledge-based, or rule-based, and it remained the dominant mode of artificial intelligence after the demise of the early connectionist wave and almost to this day (although, it should be noted, today’s second coming of artificial intelligence has been powered by a rerun of the “connectionist” or “neural” mode of machine learning). Be that as it may, Negroponte’s contraption was built and tested, and it never worked. Negroponte himself published memorable instances of its failures in his best-selling The Architecture Machine (1970).

Negroponte’s 1970 fiasco was itself emblematic of the spirit of the time. Many projects of early cybernetic and artificial intelligence in the 1960s promised more than the technology of the time could deliver, and in the early 1970s the larger scientific community started to have second thoughts on their viability. As disillusionment set in, funding and research grants (particularly from the military) soon dried up; this was the beginning of the period known in the annals of computer science as “the winter of Artificial Intelligence.” For a number of reasons, political as well as economic, and amplified by the energy crises of 1973 and 1979, the social perception of technology quickly changed too. As the new decade progressed, the technological optimism of the 1960s gave way to technological doom and gloom. While computer science went into hibernation (and many computer scientists found new jobs in consumer electronics), the architects’ fling with the first age of electronics was quickly obliterated by the postmodern leviathan. In architecture, PoMo technophobia relegated most cyber-theories and artificial intelligence dreams of the 1960s to the dustbin of design history, and the entire high-tech panoply of the 1960s and the early 1970s simply vanished from architectural culture and architectural schools around the world—more than oblivion, a sudden and total erasure of memory. Throughout the 1970s and 1980s, while architects looked the other way, computer-aided design and computer-driven manufacturing tools were being quietly, but effectively, adopted by the aircraft and automobile industries. But architects neither cared nor knew that back then—and they would not find out until much later.

2. Real Beginnings

Technologists and futurologists of the 1950s and 1960s had anticipated a technical revolution driven by bigger and ever more powerful mainframe computers. Instead, when the computing revolution came, it was due to a proliferation of much smaller, cheaper machines. These new, affordable “personal” computers (PCs) could not do much (the first, famously, did almost nothing), but their computing power was suddenly and unexpectedly put at everyone’s disposal, on almost everyone’s desktop. The IBM PC, based on Microsoft’s disk operating system (MS-DOS), was launched in 1981, and Steve Jobs’s first Macintosh, with its mandatory graphic user interface, in 1984. The first AutoCAD software (affordable CAD that could run on MS-DOS machines) was released by Autodesk in December 1982, and by the end of the decade increasingly affordable workstations (and soon thereafter, even desktop PCs) could already manipulate relatively heavy, pixel-rich and realistic images.

This is when architects and designers realized that PCs, lousy thinking machines as they were—in fact, they were not thinking machines at all—could easily be turned into excellent drawing machines. Designers then didn’t even try to use PCs to solve design problems or to find design solutions. That had been the ambition of early artificial intelligence—and that’s the plan that failed so spectacularly in the 1960s and was jettisoned in the 1970s. Instead, in the early 1990s designers started to use computers to make drawings. They did so without any reference to cybernetics or computer science. They looked at computer-aided drawings in the way architects look at architectural drawings: through the lenses of their expertise, discipline, and design theories. This is when, after the false start and multiple dead-ends of the 1960s, computers started to change architecture for good: as machines to make drawings, not as machines to solve problems.

Equally importantly, while CAD was being extensively adopted as a pedestrian but effective cost-saving device to draft, file, retrieve, and edit blueprints in a new electronic format, some started to realize that computers could also be used to create new kinds of drawings—drawings that would have been difficult or perhaps impossible to draw by hand (as many designers of the deconstructivist wave would soon find out). The first seeds of this idea may have been sown by the team of young designers, theoreticians, artists and technologists that met in or around the Paperless Studio, created at the Graduate School of Architecture, Planning, and Preservation of Columbia University in the early 1990s by its recently appointed Dean Bernard Tschumi.

In 1993 one of them, twenty-nine-year-old Greg Lynn, published the first manifesto of the new digital avant-garde: an issue of AD (Architectural Design) titled “Folding in Architecture,” prominently including recent work of Peter Eisenman. The “fold” in the title referred to a book by Gilles Deleuze (The Fold: Leibniz and the Baroque, first published in French in 1988), which contained a few pages of architectural theory attributed by Deleuze to his gifted student, the architect and polymath Bernard Cache. Deleuze’s “fold” was, literally, the point of inflection in the graph of a continuous function, otherwise defined analytically by way of the maxima and minima of first derivatives, but for reasons unknown, and which deserve further scrutiny, Deleuze attributed to this staple of differential calculus vast and profound ontological and aesthetic meanings. Deleuze’s philosophical enquiry addressed, in very abstract terms, the simultaneous invention of the “fold” in mathematics and in the arts (painting, sculpture, architecture) in the age of the baroque. But in the architectural culture of the early 1990s Deleuze’s bizarre infatuation with the smooth transition from convexity to concavity in an S-shaped curve would prove contagious, as it came to define the shape, or style, of the first wave of digitally intelligent design.

Deleuze’s aura, formidable as it was at the time, could not alone have managed that. A concomitant and possibly determinant cause was the arrival on the market of a new family of CAD software, which allowed the intuitive manipulation of a very special family of continuous curves, called “splines.” Hand-made splines had been used for centuries for streamlining the hull of boats, and spline-modeling software had been used by car and aircraft makers since the 1970s for similar aerodynamic purposes. New, affordable, and user-friendly CAD software that became available in the early 1990s put streamlining within the reach of every designer, and digital streamlining soon became so ubiquitous and pervasive that many started to see it as an almost inevitable attribute of digital design. Mathematical splines are continuous, differentiable functions, and through one of those felicitous blunders that often have the power to change the history of ideas, spline-based, aerodynamic curves and surfaces came to be seen as the digital epiphany of the Deleuzian Fold. Gilles Deleuze himself, who died in November 1995, was not consulted on this matter. For what we know of him, chances are that Deleuze had very little interest in car design, streamlining, and aerodynamics in general.

Yet at that point the cat was out of the bag: the first practical utilization in architecture of CATIA, the now celebrated spline-modeling software developed by French aircraft manufacturer Dassault, was for the construction of a big fish, hovering over the central beach of Barcelona (Frank Gehry, 1992). Since then, and particularly after the inauguration of his Guggenheim Bilbao (1997), Gehry has been seen as the worldwide specialist of digital streamlining in architecture. In 1996 Greg Lynn introduced the term “blob” to define the new style of digital curviness; more recently, Patrik Schumacher popularized the term “parametric” (in common parlance the term refers sometimes to parametric scripting, in the stricter technical sense, and sometimes more generally to the visual style of digital streamlining). From the end of the 1990s, and to some extent into the present, digital streamlining has been seen as the outward and visible sign of the first digital turn in architecture: the image of a new architecture that until a few years ago, without digital techniques, would have been impossible, or almost impossible, to design and build.

Despite its recent wane, the popularity of digital streamlining, in the 1990s and beyond, belies and obfuscates the importance of the technology underpinning it, which was and still is inherent in the digital mode of production, regardless of form and style. Scripted code for digital design and fabrication uses mathematical notations where variations in the values of some parameters, or coefficients, can generate “families” of objects, all individually different yet sharing the same syntax of the original script. Due to this commonality of code, these “families” of objects may also share some visible features, and look similar to one another. Back in 1988 Deleuze and Cache called this new type of generic technical object an “objectile,” and Deleuze’s and Cache’s objectile (a set or range of different phenotypes with some code, or genotype, in common) remains to this day the most pertinent description of the new technical object of the digital age. Early in the 1990s Greg Lynn and others came to very similar definitions of continuous parametric variations in design and fabrication.
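The logic of such a script can be illustrated with a minimal Python sketch. It is not drawn from any actual design software: the `profile` function, its three parameters (`amplitude`, `frequency`, `taper`), and the damped sine wave it computes are all hypothetical, chosen only to show how one unchanging piece of code, fed different parameter values, may yield a family of related but individually different objects.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ProfileParams:
    """Hypothetical parameters of one variant (illustrative names only)."""
    amplitude: float  # height of the undulation
    frequency: float  # number of undulations along the unit length
    taper: float      # how quickly the undulation dies out (0 = no taper)


def profile(params: ProfileParams, samples: int = 9) -> list[tuple[float, float]]:
    """The shared script: the same code for every variant, only the values differ."""
    points = []
    for i in range(samples):
        x = i / (samples - 1)
        damping = math.exp(-params.taper * x)
        y = params.amplitude * damping * math.sin(2 * math.pi * params.frequency * x)
        points.append((x, round(y, 4)))
    return points


def family(count: int) -> list[list[tuple[float, float]]]:
    """Generate `count` variants by sweeping the parameters step by step."""
    variants = []
    for k in range(count):
        params = ProfileParams(
            amplitude=1.0 + 0.25 * k,
            frequency=1.0 + 0.5 * k,
            taper=0.5 * k,
        )
        variants.append(profile(params))
    return variants


if __name__ == "__main__":
    for variant in family(3):
        print(variant)
```

In the terms used above, `profile` stands in for the common code (the genotype) and each list of points for one individual variant (a phenotype); producing a fourth or a thousandth variant requires no change to the script, only another set of values.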

This was the mathematical and technical basis of what we now call digital mass-customization: the discovery of a non-standard mode of digital design and fabrication, where individual product variations can be serially manufactured in a fully digital workflow (CAD-CAM) at no supplemental cost. Digital mass-customization is not only a fundamentally anti-modern idea—in so far as it upends all the socio-technical principles of industrial modernity; it is also the long-postponed fulfilment of a core aspiration of postmodernism: a postmodern dream come true, in a sense, courtesy of digital technologies. Digital mass-customization may deliver variations for variations’ sake—in theory, at no cost. This is a plan that Charles Jencks could have endorsed—and in fact did, starting with his capital but often misunderstood The Architecture of the Jumping Universe (1995–97). And in so far as it is animated by a powerful anti-modern creed, this is also a conceptual framework that Gilles Deleuze might have found congenial—indeed, excepting the technological part of the plan, which was mostly due to Bernard Cache, this is an idea that can be traced back to him. The idea of digital mass-customization was conceived, theorized, investigated, and tested by architects and designers before anyone else did. It is also one of the most momentous, revolutionary ideas that architects and designers ever came up with. Standardization, which in the industrial mode of production reduces costs, in a digital mode of production only reduces the choices made available to the designer.

Digital mass-customization, or non-standard seriality, is a new way of making; a technical logic quintessentially inherent in all modes of digital design and fabrication, regardless of form or style. In a parallel and equally disruptive development, consistent with but partly independent from the tectonic arguments related to the early theories of CAD-CAM, digital design culture in the 1990s also started to address the urban, economic, and social consequences of the migration of human activities from physical space to virtual reality, the internet, or “cyberspace,” a trend then often called “despatialization.” The late William Mitchell, then Dean of the School of Architecture and Planning at MIT, was one of the most lucid theoreticians of the new, “despatialized,” internet-driven economy, which he saw as forthcoming. One generation later, the premises of Mitchell’s visionary, almost prophetic body of work have been vindicated by the experience of the pandemic, and old models of virtual reality and vintage “cyberspatial” experiments are now being boisterously revived by Facebook’s Metaverse, among others.

3. The Second Digital Turn

Early in the new millennium, in the wake of the dot-com crash (2001) and in the more contrite environment that followed 9/11 and the start of the second Gulf War (2003), the first wave of digital streamlining (or blob making) abated, and a new emphasis on process, rather than on form, led many digital designers to probe the participatory nature of digital parametricism, and of digital mass-customization more generally. A new spirit and some new technologies, which could exploit the infrastructural overinvestment of the 1990s at a discount, led to what was then called the Web 2.0: the participatory Web, based on collaboration, interactivity, crowdsourcing, and user-generated content. It then became evident that the open-endedness of parametric scripting inevitably challenges the authorial mode of design by notation that Western architects have adopted and advocated since the Italian Renaissance. If the same parametric script can generate a theoretically infinite range of variations, who will choose one variation or another? Bernard Cache’s Tables Projectives (2004–2005) tried to exploit the technical logic of digital parametricism and of CAD-CAM (digital design to manufacturing) to invite (in fact, to oblige) the end-user to become the co-designer of a physical object, thus reviving in part Negroponte’s cybernetic visions of the late 1960s. With different social purposes and ideological intent, similar principles have been tested by the FabLab Movement (originating from Neil Gershenfeld’s MIT teaching, circa 2001) or by the WikiHouse Foundation (2011), among others. But the meteoric rise of so-called social media, from Facebook to Wikipedia, was not matched by any comparable development in digital design. Rooted in the modern tradition of the architect as a humanist artist, the design professions showed limited interest in a techno-cultural development that many feared could limit their authorial remit.
Meanwhile, the most lasting legacy of the spirit of the Web 2.0 in architecture goes under the generic name of Building Information Modeling (BIM), a family of corporate CAD-CAM software meant to facilitate collaboration between designers and contractors, favorably embraced by the building and construction industry and some government bodies but often reviled by the creative professions and academia.

Another reaction against the formal fluidity of digital streamlining that took shape as of the early 2000s may have been originally prompted by some technical developments, but went on to acquire deeper aesthetical, ideological, and even socio-political significations. Early in the new millennium 3D printing emerged as the manufacturing technology of choice for a new generation of digital designers, replacing the CNC milling machines of the 1990s. 3D printers can fabricate small cubic units of matter, called voxels, in minuscule sizes and at ever increasing resolutions. Starting with Philippe Morel’s seminal Bolivar Chair (2004), many designers confronting this new technical logic have chosen to leave each particle conspicuously visible, thus displaying the discrete logic at play. Early in the new millennium new methods of structural analysis, such as Finite Element Analysis, and new mathematical tools, such as Cellular Automata, similarly based on a discrete logic of computation, also became popular among designers. Discrete math, implying as it does enormous amounts of uncompressible raw data, is not human-friendly; no human can notate, calculate, or even fabricate, for that matter, one trillion voxels one by one. But this is exactly the kind of work that computers can do, thanks to their super-human computing speed, memory, and processing power. Discrete math is made for computers, not for us.
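The scale of the data involved can be suggested with a minimal Python sketch. It is an illustration under stated assumptions, not a model of any actual 3D printing workflow: the `voxelize_sphere` function is hypothetical, and it simply lists, one by one, the cells of a cubic grid whose centers fall inside a sphere inscribed in that grid.

```python
def voxelize_sphere(resolution: int) -> set[tuple[int, int, int]]:
    """Return the grid cells (voxels) whose centers lie inside a sphere.

    The sphere has diameter 1 and is inscribed in a unit cube that is
    divided into resolution x resolution x resolution cells.
    """
    voxels = set()
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                # Coordinates of the cell center, measured from the cube center.
                x = (i + 0.5) / resolution - 0.5
                y = (j + 0.5) / resolution - 0.5
                z = (k + 0.5) / resolution - 0.5
                if x * x + y * y + z * z <= 0.25:  # radius 0.5, squared
                    voxels.add((i, j, k))
    return voxels


if __name__ == "__main__":
    # Each doubling of the resolution multiplies the number of cells by eight.
    for resolution in (2, 4, 8, 16, 32):
        print(resolution, len(voxelize_sphere(resolution)))
```

Nothing in the resulting list is abbreviated by a formula: every voxel is recorded individually, and the count grows roughly with the cube of the resolution, which is why this kind of notation is tractable for machines and not for people.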

In architecture, the most conspicuous manifestation of computational discretism, and of the “big data” technical logic underpinning it, is found in objects that display an unprecedented level of formal exuberance. Among the best examples of this new digital style (sometimes called “the second digital style,” or the style of the second digital turn) is Digital Grotesque I (2013) by Michael Hansmeyer and Benjamin Dillenburger, a walk-in grotto composed of an extraordinary number of voxels, each of which can be seen as an individually designed, calculated, constructed, and positioned micro-brick. The disquieting or even hostile aesthetic of these creations transcends our ability to apprehend and comprehend them, reflecting an already post-human logic at play, conspicuously at odds with the organic, “small-data” logic of our mind. Traces of a similarly “excessive” visual resolution are also found in recent works by Marjan Colletti, Matias del Campo and Sandra Manninger, Mark Foster Gage, Alisa Andrasek, and—with different premises—also in the “particlized” style of Kengo Kuma. Claudia Pasquero, Marco Poletto, Neri Oxman, and others have added forceful biophilic and biomimetic inspiration; in the case of Jenny Sabin, with direct reference to biology and medical research. But the aesthetic of the digital discrete now seems to be spreading outside the rarified circles of the digital avant-garde, asserting itself as one of the generic stylistic currents of our time, without any direct reference to digital techniques (see, for example, some recent works of Sou Fujimoto); the style of excessive resolution, often invoked as the harbinger of a new “post-human” aesthetic, has also been critiqued using categories as varied as the modernist theory of estrangement (Entfremdung), or the late-classical and Romantic tradition of the sublime.

Of course the production of objects composed of an enormous number of components (all the same or all different or anything in between) brings the problem of their assembly to the fore. This was an insurmountable problem until recently, because the cost of bespoke, manual assembly would have been prohibitive, while the industrial robots that have been in use since the 1960s, designed for the automation of repetitive motions in factory-like environments, cannot deal with the irregular, improvised, and at times unpredictable operations that are the daily lot of building and construction work. This is why in recent years various digital creators have strived to develop a new generation of post-industrial robots, so to speak: intelligent robots that are able to emulate some artisanal operations. Pioneers in this field include Fabio Gramazio and Matthias Kohler at the ETH in Zurich, who starting in the early 2000s have demonstrated that traditional industrial robots can be reprogrammed to carry out the automatic laying of bricks in compositions of all kinds, including irregular ones. The group of Achim Menges and Jan Knippers at the University of Stuttgart specializes in the development of “adaptive” or versatile robots, capable of altering robotic operations in response to unexpected circumstances, and thus to work with natural, non-standardized materials (non-industrial wood, in particular) or new composite materials having unpredictable (non-linear) structural performance.

This line of research follows from early experiments on material self-organization, carried out by the Emergence and Design Group (Achim Menges, Michael Hensel, and Michael Weinstock) at the Architectural Association in London in the early 2000s. Their influential publications (in particular their 2004 issue of AD, “Emergence: Morphogenetic Design Strategies”) helped popularize ideas and themes of complexity science that have since become pervasive in many areas of computational design. Gilles Retsin and Manuel J. Garcia concentrate on the automated assembly of modular macro-components—a trend that resurfaces in the experiments of José Sanchez at the University of Southern California and elsewhere, producing an aesthetic of chunky aggregations that sometimes suggests late-mechanical precedents (this trend has also been called “digital neobrutalism”). This is not the only sign of nostalgia for the glory years of cybernetics and early artificial intelligence—nostalgia that is now widespread in architecture schools and certain circles of the digital avantgarde, often accompanied by an outspoken rejection of the neoliberal and sometimes libertarian bias associated (mostly subliminally, but sometimes explicitly) with the politics of the first digital turn and some of its protagonists. In more recent times, the growing alertness of the digital design community to today’s environmental concerns has furthered the disconnection between the digital pioneers of the 1990s and a younger generation of digital creators.

4. AI Reborn

To some extent, the current revival of interest in artificial intelligence (now often capitalized and called AI, or rebranded, metonymically, as “machine learning”) is warranted: due to the immense memory and processing power of today’s computers, some of the methods of vintage artificial intelligence, as intuited by Minsky and others in the late 1950s and early 1960s, appear now as increasingly viable. Unlike early artificial intelligence, which famously never worked, today’s AI often works, sometimes surprisingly well: computers can now play chess, translate texts in natural languages, and even drive cars (sort of). So the pristine modernist dream of artificial intelligence as a “general problem solving machine” seems again within reach, and architects and designers are rethinking their general approach to computer-based design and fabrication. For the last thirty years computers have been a stupendous but always ancillary drawing machine that architects could use at will to notate, calculate, and build unprecedented forms, to mass-produce non-standard variations at no extra cost, or to enhance collaboration in a distributed, post-authorial design process. Today computers appear ready, once again, to take on or reclaim their ancestral role as thinking machines: machines that can solve design problems, and hence design on their own, autonomously, and to some extent in the architect’s stead. Half a century of stupendous technical progress would thus appear to have brought us back, conceptually, to where we stood around 1967.

Fortunately, not all that is happening in today’s computational design is half a century old. Among many applications of machine learning one in particular, where Generative Adversarial Networks (GANs) are used as image processing tools, has recently attracted the attention of visual artists and of computational designers. The technology has been trained to recognize similarities in a corpus of images that are labeled as instantiations of the same name, or idea; and, in reverse, to create new images that illustrate some given names, or ideas. Stunning instances of the use of GANs to create brand-new architectural images, or to merge existing ones (“style transfer”), have been shown recently by Matias del Campo, Stanislas Chaillou, and others. At the time of writing Midjourney and DALL-E, AI programs that create images from textual descriptions, are the talk of architectural students around the world. All GAN-generated art is for now two-dimensional, but three-dimensional experiments are underway, a crucial development for architectural purposes.

This apparently ludic display of computer graphics prowess touches on some core theoretical tropes of Western philosophy and art theory. Thanks to data-driven artificial intelligence we can now mass-produce endless non-identical copies of any given set of archetypes or models (never mind how they are invoked, either visually or verbally). As a welcome side effect, this use of computer-generated art has revived notions and theories of imitation in the arts, and of artistic styles, which had been eluded or repudiated by modernist art theory. Yet, at the time of this writing, the relevance of GAN technologies to contemporary architectural design still appears as somewhat limited. GAN may have successfully automated visual imitation—a giant leap for the history and theory of the arts. But which designer would want to borrow someone else’s intelligence (never mind if artificial) to design a building that looks like someone else’s building? A similarly cautionary note should also apply, in my opinion, to the more general issue of the practical applicability of today’s born-again artificial intelligence to architectural design.

Artificial intelligence can now reliably solve problems and make choices. But data-driven artificial intelligence solves problems by iterative optimization, and problems must be quantifiable in order to be optimizable. Consequently, the field of action of data-driven artificial intelligence as a design tool is by its very nature limited to tasks involving measurable phenomena and factors. Unfortunately, architectural design as a whole cannot be easily translated into numbers. Don’t misunderstand me: architectural drawings have been digitized for a long time; but no one has yet found an agreed-upon metric to assess values in architectural design. Therefore, only specific subsets or tasks of each design assignment can be optimized by AI tools, insofar as they lead to measurable outputs. And even within that limited ambit, most quantifiable problems will likely involve more than one optimizable parameter, so someone, at some point, will have to prioritize one parameter over another.

As Phillip Bernstein concludes in his recent book dedicated to machine learning in architecture, the adoption of AI in the design professions will not lead to the rise of a new breed of post-human designers, but to the development of more intelligent design tools. Architects and designers will have to learn to master them, if for no other reason than that they are soon going to be the cheapest and most effective tools of their trade. If architects choose not to use them, others will. But to imagine that a new generation of computers will be able to replace the creative work of architects (as Negroponte and others thought at the end of the 1960s, and some again think today) does not seem either useful or intellectually relevant; at least, not for the time being, nor for some time to come.

Bibliographical note

The rise of industrial CAD and of “cybernetic” architecture in the 1960s and early 1970s has recently been the subject of excellent scholarly studies: see in particular Molly Steenson, Architectural Intelligence: How Designers and Architects Created the Digital Landscape (Cambridge, MA: The MIT Press, 2017); Daniel Cardoso Llach, Builders of the Vision: Software and the Imagination of Design (New York, London: Routledge, 2015); Theodora Vardouli and Olga Touloumi, eds., Computer Architectures: Constructing the Common Ground (New York, London: Routledge, 2019).

The rise of digital formalism in the early 1990s has been recounted by Greg Lynn, one of its protagonists (Greg Lynn, ed., Archaeology of the Digital, Montreal: Canadian Centre for Architecture; Berlin: Sternberg Press, 2013), and theorized by Patrik Schumacher (The Autopoiesis of Architecture, I and II, London: Wiley, 2011 and 2012); more recently, a new generation of young scholars and doctoral students has started tackling the history of digital design in the 1990s: see Andrew Goodhouse, ed., When Is the Digital in Architecture, Montreal: Canadian Centre for Architecture; Berlin: Sternberg Press, 2017; and Nathalie Bredella, “The Knowledge Practices of the Paperless Studio,” GAM, Graz Architecture Magazine 10 (2014): 112–127. The history and theory of BIM are recounted by Phillip Bernstein, Architecture-Design-Data: Practice Competency in the Era of Computation (Basel: Birkhäuser, 2018); for more recent developments, see Bernstein’s Machine Learning: Architecture in the Age of Artificial Intelligence (London: RIBA Publishing, 2022).

I have myself written chapters on the technical history of Bézier’s curves, on the importance of Gilles Deleuze’s philosophy for digital design theory, on the rise of digital mass-customization in the 1990s, etc.; sources for my arguments in this article can be found in particular in The Alphabet and the Algorithm (2011), The Second Digital Turn (2017), and Beyond Digital (2023), all published by the MIT Press; an earlier, much shorter draft of this article was published in Casabella 914, 10 (2020): 28–35. A comprehensive survey of the state of digital design in the early 2000s is in Antoine Picon, Digital Culture in Architecture (Basel: Birkhäuser, 2010); see also Matthew Poole and Manuel Shvartzberg, eds., The Politics of Parametricism (London: Bloomsbury, 2015). But a synoptic historiographic assessment of the history of the digital turn in architecture is still missing, with the partial exception of a recent publication by the Architecture Museum of the Technical University of Munich (Teresa Fankhänel and Andres Lepik, eds., The Architecture Machine, Basel: Birkhäuser, 2020), derived from an exhibition held there in the winter of 2020–21. See also an excellent compendium written by Mollie Claypool for IKEA’s SPACE10, now online at ➝.

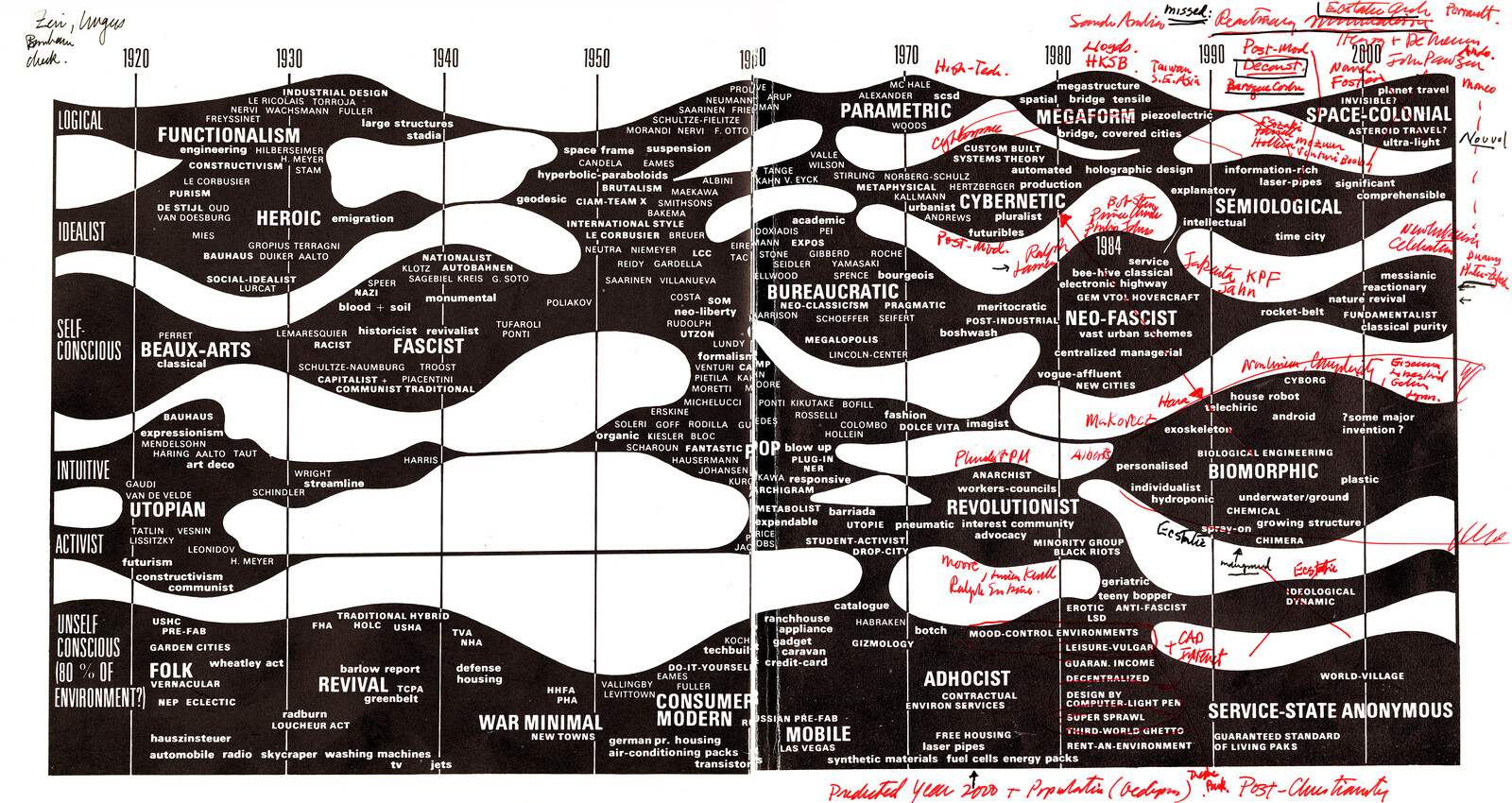

Chronograms of Architecture is a collaboration between e-flux Architecture and the Jencks Foundation within the context of their research program “‘isms and ‘wasms.”